The Future of MCP: Why the Model Context Protocol Is Becoming the “USB-C for AI”

AI is entering a phase where raw model capabilities are no longer the main bottleneck. Instead, the real challenge lies in connecting models with the world around them—files, business systems, cloud infrastructure, analytics tools, devices, and everyday applications.

In late 2024, Anthropic introduced the Model Context Protocol (MCP): an open standard designed to unify how AI agents access external data and tools. And while MCP is still young, its trajectory suggests far more than another developer experiment. MCP hints at a future where AI systems operate not as isolated text predictors but as programmable agents seamlessly embedded in the user’s ecosystem.

Below, we explore MCP’s evolution, compare it with OpenAI’s tooling paradigm, review the emerging ecosystem, and examine why MCP could become one of the most influential shifts in AI integration in the coming years.

What MCP Actually Is—and Why It Matters

MCP is an open, interoperable protocol that defines a universal way for AI models to communicate with external systems using a client–server model.

Instead of writing custom connectors for every model and every integration—a problem known as the N×M fragmentation issue—MCP turns tools into simple, reusable “servers” that speak a shared language via JSON-RPC.

Think of MCP as standardizing the “plug” between AI and tools, the same way USB standardized connectivity between devices.
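To make the "shared language" concrete, here is a minimal sketch of what JSON-RPC 2.0 messages between an MCP client and server might look like. The method names `tools/list` and `tools/call` follow the MCP specification as commonly documented. The `make_request` helper, the `read_file` tool, and its arguments are hypothetical and exist only for illustration.

```python
import json

def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request object as a plain dict."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message

# Ask a server which tools it offers, then invoke one of them.
list_request = make_request(1, "tools/list")
call_request = make_request(
    2,
    "tools/call",
    # "read_file" and its arguments are hypothetical, for illustration only.
    {"name": "read_file", "arguments": {"path": "notes/todo.txt"}},
)

wire_text = json.dumps(call_request)  # what would travel over the transport
decoded = json.loads(wire_text)       # what the receiving side would parse
print(wire_text)
```

Because every server accepts the same message envelope, a client only needs to vary the method name and parameters, not the plumbing.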

Why MCP Is Different

- Open & Interoperable: Any vendor can implement it. Any model can use it.

- Client–Server Architecture: Models act as clients; external capabilities are encapsulated in lightweight servers.

- Persistent Sessions: AI can maintain long-lived, stateful interactions with tools.

- Unified Interface: One integration works across many models.
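The client-server split above can be illustrated with a toy dispatcher. This is a simplified sketch, not the official SDK: the `TOOLS` registry, the `handle` function, and both tools are hypothetical, and a real server would also handle initialization, transports, and input schemas.

```python
import json

# Hypothetical tool registry: name -> (description, callable).
TOOLS = {
    "add": ("Add two numbers.", lambda args: args["a"] + args["b"]),
    "shout": ("Upper-case a string.", lambda args: args["text"].upper()),
}

def handle(raw_request):
    """Answer one JSON-RPC 2.0 request string with a response string."""
    request = json.loads(raw_request)
    response = {"jsonrpc": "2.0", "id": request.get("id")}
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        response["result"] = {
            "tools": [{"name": n, "description": d} for n, (d, _) in TOOLS.items()]
        }
    elif method == "tools/call":
        name = params.get("name")
        if name not in TOOLS:
            # -32602 is the JSON-RPC 2.0 "Invalid params" code.
            response["error"] = {"code": -32602, "message": f"Unknown tool: {name}"}
        else:
            _, func = TOOLS[name]
            value = func(params.get("arguments", {}))
            response["result"] = {"content": [{"type": "text", "text": str(value)}]}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" code.
        response["error"] = {"code": -32601, "message": "Method not found"}
    return json.dumps(response)

reply = json.loads(handle(json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
})))
print(reply["result"]["content"][0]["text"])  # prints 5
```

Any client that can produce these requests can use the tools, which is the sense in which one integration works across many models.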

The protocol emerged as a response to the growing isolation of large language models: powerful reasoning engines that remain blind to a company’s own data. By defining a shared access layer, MCP aims to bridge that gap.

Anthropic released MCP as an open standard along with SDKs (initially Python and TypeScript, with Java and others following) and several reference servers. Early supporters such as Block, Apollo, Zed, Replit, Codeium, and Sourcegraph quickly showcased practical use cases. Within a few months, the community had created hundreds of compatible MCP servers, making this one of the fastest-growing open AI integration ecosystems to date.

This rapid expansion underscores a key trend: tools, not models, will define next-generation AI experiences.

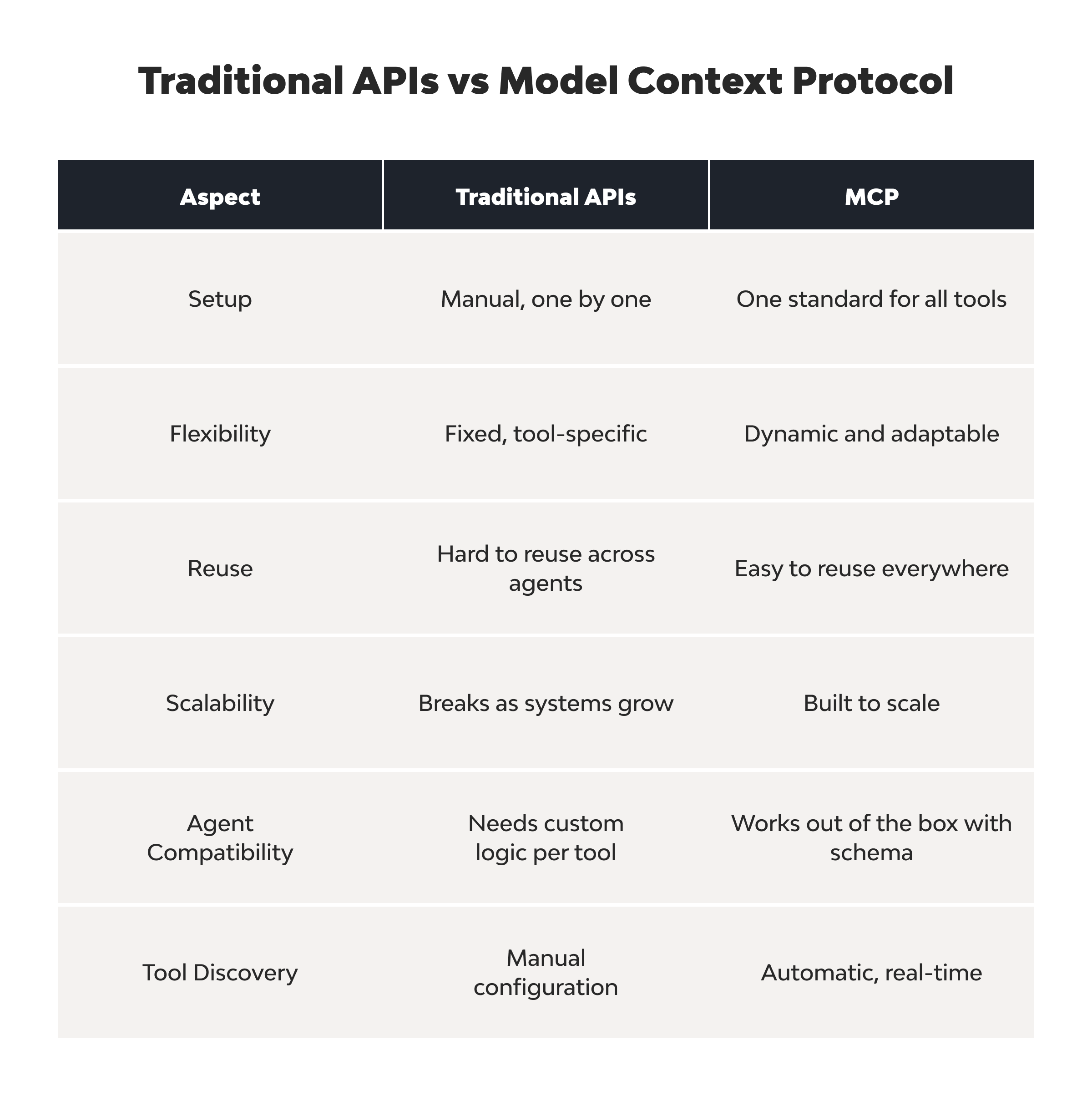

MCP vs. OpenAI Tools: Two Visions for How AI Should Use External Capabilities

OpenAI pioneered the idea of model-driven tool invocation with plugins and function calling. MCP takes a fundamentally different path.

Below is a strategic comparison—not merely on features, but on philosophy.

1. Openness vs. Platform Lock-In

- OpenAI Tools: Proprietary, tied to ChatGPT and the OpenAI execution stack.

- MCP: Vendor-neutral, open, and portable across ecosystems.

This difference alone has enormous implications: MCP can evolve into an industry standard, whereas OpenAI’s approach remains vertically integrated.

2. One Integration vs. Fragmented Integrations

OpenAI plugins are purpose-built for GPT models. MCP servers are reusable across Claude, GPT, local models, or custom agent frameworks.

Write once. Use everywhere.

This is why the dev community embraced MCP so quickly—it kills redundant engineering.
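The saving is easy to quantify. The sketch below compares the number of connectors needed with and without a shared protocol; the function names and the example counts are illustrative assumptions, not measurements.

```python
def connectors_without_protocol(num_models, num_tools):
    """Every model needs its own connector to every tool."""
    return num_models * num_tools

def connectors_with_protocol(num_models, num_tools):
    """Each model implements one client, each tool one server."""
    return num_models + num_tools

models, tools = 4, 25  # illustrative numbers, not measurements
print(connectors_without_protocol(models, tools))  # 100
print(connectors_with_protocol(models, tools))     # 29
```

The gap widens as either side grows, since one count scales as a product and the other as a sum.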

3. Stateless API Calls vs. Persistent, Contextual Sessions

An OpenAI function call is resolved within a single request-response cycle, so any state that must survive between calls has to be carried by the caller.

MCP maintains a persistent channel, enabling:

- streaming updates,

- internal tool state,

- multi-step workflows,

- agentic behavior without re-authentication loops.

This makes MCP better suited for complex automations and agent frameworks.
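The difference can be sketched with a toy session object. Everything here is hypothetical (the `ToolSession` class, the `cd` and `pwd` tools, the token): it models neither OpenAI's API nor the MCP wire protocol, only the idea of state that outlives a single call.

```python
class ToolSession:
    """Hypothetical long-lived session that keeps state between calls."""

    def __init__(self, token):
        self.token = token        # authenticated once, at session start
        self.auth_checks = 0
        self.working_dir = "/"    # tool-side state that survives across calls
        self._authenticate()

    def _authenticate(self):
        self.auth_checks += 1

    def call(self, tool, **arguments):
        if tool == "cd":
            self.working_dir = arguments["path"]
            return self.working_dir
        if tool == "pwd":
            return self.working_dir
        raise ValueError(f"unknown tool: {tool}")

def stateless_call(token, tool, **arguments):
    """Each call builds a fresh session, so earlier state is lost."""
    return ToolSession(token).call(tool, **arguments)

session = ToolSession("demo-token")
session.call("cd", path="/projects/report")
print(session.call("pwd"))                  # /projects/report
print(stateless_call("demo-token", "pwd"))  # /
```

The persistent session authenticates once and remembers the working directory, while the stateless helper re-authenticates and starts from scratch on every call.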

4. Roadmaps Reflect Different Philosophies

OpenAI focuses on increasingly capable models. Anthropic invests in infrastructure, workflows, and agent interfaces. These contrasting strategies are shaping two diverging ecosystems: model-centric vs. tool-centric AI.

Top 10 Emerging Open-Source MCP Servers (2025)

The MCP ecosystem is evolving at breakneck speed. Below are ten of the most widely adopted community servers, showcasing the breadth of what MCP enables.

1. File System Server

Read, write, organize, or manipulate local files.

Why it matters: AI becomes a true operating-system assistant—automating backups, organizing media, or preparing project directories.

2. GitHub Server

Navigate repos, search code, open PRs, or propose changes.

Why it matters: It’s effectively a junior dev who can inspect your codebase and take action.

3. Slack Server

Access channels, send messages, read discussions.

Why it matters: Turns AI into a communication layer for daily standups, announcements, or information retrieval.

4. Google Maps Server

Perform geospatial queries and route planning.

Why it matters: Enables location-aware assistants, logistics support, and travel planning.

5. Brave Search Server

Real-time web search capabilities.

Why it matters: Bridges the gap between model knowledge and current events without browser plugins.

6. Bluesky Server

Post content, read timelines, and analyze trends.

Why it matters: Ideal for creators, community managers, and automated content workflows.

7. PostgreSQL Server

Safe (often read-only) SQL access for structured data.

Why it matters: Non-technical users can query databases conversationally.
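A read-only guard of this kind can be sketched as follows. To keep the example runnable without a database server, SQLite from the Python standard library stands in for PostgreSQL, and its `query_only` pragma plays the role that a read-only role or transaction would play there. The `orders` table and the `run_query` helper are hypothetical.

```python
import sqlite3

# sqlite3 stands in for PostgreSQL here so the sketch runs without a server.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
conn.executemany("INSERT INTO orders (total) VALUES (?)", [(19.5,), (42.0,)])
conn.commit()

# From here on the connection refuses statements that modify data.
conn.execute("PRAGMA query_only = ON")

def run_query(sql, params=()):
    """Run one statement; return rows, or an error string if it is rejected."""
    try:
        return conn.execute(sql, params).fetchall()
    except sqlite3.Error as exc:
        return f"rejected: {exc}"

print(run_query("SELECT COUNT(*), SUM(total) FROM orders"))
print(run_query("DELETE FROM orders"))
```

Enforcing the restriction at the connection level, rather than by inspecting the SQL text, means a cleverly phrased write statement is still refused.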

8. Cloudflare Server

Manage deployments, DNS, CDN, Workers, and more.

Why it matters: Connects AI directly to edge infrastructure, so DevOps automation can be driven by natural language.

9. Raygun Server

Monitor app crashes and performance metrics.

Why it matters: Gives AI observability capabilities: instant error insights without digging through logs.

10. Vector Search Server

Semantic memory for embeddings-based retrieval.

Why it matters: Enables agents with true long-term memory, research capabilities, and knowledge indexing.
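Embeddings-based retrieval boils down to ranking stored vectors by similarity to a query vector. The sketch below uses cosine similarity over hand-written three-dimensional vectors; the `MEMORY` contents and the `search` helper are hypothetical, and a real server would obtain embeddings from a model and use an indexed vector store.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy 3-dimensional "embeddings"; a real system would get these from a model.
MEMORY = {
    "Quarterly revenue grew 12%": [0.9, 0.1, 0.0],
    "The deploy failed on Friday": [0.1, 0.9, 0.2],
    "Team offsite is in Lisbon": [0.0, 0.2, 0.9],
}

def search(query_vector, top_k=1):
    """Return the top_k stored texts most similar to the query vector."""
    ranked = sorted(
        MEMORY,
        key=lambda text: cosine_similarity(query_vector, MEMORY[text]),
        reverse=True,
    )
    return ranked[:top_k]

print(search([0.2, 0.8, 0.1]))  # ['The deploy failed on Friday']
```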

This diversity shows how MCP is becoming an integration fabric—not for one model, but for the entire AI ecosystem.

Top 3 Commercial MCP Implementations

Commercial tools are already using MCP as a foundational integration layer.

1. Claude Desktop

A flagship reference implementation. Users can attach servers directly to Claude, transforming it from a chatbot into a programmable OS-level assistant.
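Attaching a server typically means adding an entry to a JSON configuration file. The sketch below builds such an entry in Python and prints it as JSON. The `mcpServers` layout reflects the commonly documented Claude Desktop configuration, but treat it as an assumption and check the current documentation; the directory path is a placeholder.

```python
import json

# Assumed layout of a Claude Desktop configuration entry; the directory
# path is a placeholder, not a real location.
config = {
    "mcpServers": {
        "filesystem": {
            "command": "npx",
            "args": [
                "-y",
                "@modelcontextprotocol/server-filesystem",
                "/path/to/allowed/dir",
            ],
        }
    }
}

print(json.dumps(config, indent=2))
```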

2. Sourcegraph Cody (Enterprise MCP)

Cody uses MCP to reach multiple internal systems—code search, repos, documentation—serving engineers with real contextual intelligence.

3. EnConvo AI Launcher

A consumer-facing implementation for macOS that combines more than 150 tools with MCP extensibility. It offers a glimpse into how MCP can power everyday user workflows.

What MCP Unlocks Next: Beyond Developer Tools

While the current narrative focuses on dev-centric use cases, MCP’s impact may reach far beyond engineering.

1. Personal AI Operating Systems

Imagine a unified assistant that natively integrates with email, calendars, note apps, home automation, ticketing systems, cloud storage, and browser sessions.

All through a standardized access layer rather than brittle integrations.

2. Enterprise AI without the Glue Code

MCP could unify HR systems, ERPs, CRMs, ITSM tools, and analytics platforms.

One AI agent could query them all through a secure, standardized protocol.

3. Next-Generation EdTech, HealthTech, and Creative Apps

Standardized access means an AI tutor or AI designer can:

- fetch academic materials,

- retrieve medical records (with permissions),

- manipulate creative software,

- run simulations,

- interact with real devices.

MCP could become the “AI middleware” layer for entire industries.

4. The Emergence of “Protocol Thinking”

Just as HTTP standardized web services and USB standardized hardware, MCP introduces the idea that AI tools should follow protocol—not platform—semantics.

We could see:

- multiple MCP-like standards coexisting,

- domain-specific extensions,

- “AI app stores” built around open protocols instead of walled gardens.

Barriers to Adoption: Why MCP Isn’t Mainstream Yet

Despite its promise, MCP must overcome key challenges:

- Developer-Heavy Setup: Running servers, configuring transports, and managing versions is still far from a one-click experience.

- No Mature Remote Connectivity: Current implementations focus on local connections. Enterprise-grade security, authentication, and remote tooling are still in development.

- Security Uncertainty: Granting AI “tool access” is inherently risky. Principles for secure sandboxing, permissioning, and auditing require further standardization.

- High Learning Curve: MCP introduces new abstractions: tools, resources, transports, prompts, and sessions. Teams must invest in training and architectural alignment.

- Industry Fragmentation: Adoption depends heavily on support from OpenAI, Google, and Microsoft. If they promote competing protocols, the ecosystem may splinter.
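To illustrate the permissioning and auditing concern, here is a minimal sketch of an allowlist wrapper that records every attempted tool call. The `PermissionGate` class and the tool names are hypothetical; this is one possible shape for such a guard, not something the protocol itself prescribes.

```python
from datetime import datetime, timezone

class PermissionGate:
    """Hypothetical allowlist wrapper that records every tool call attempt."""

    def __init__(self, allowed_tools):
        self.allowed_tools = set(allowed_tools)
        self.audit_log = []

    def invoke(self, tool_name, func, **arguments):
        allowed = tool_name in self.allowed_tools
        # Log before acting, so denied attempts are recorded too.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "tool": tool_name,
            "arguments": arguments,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"tool not permitted: {tool_name}")
        return func(**arguments)

gate = PermissionGate(allowed_tools=["read_file"])
print(gate.invoke("read_file", lambda path: f"contents of {path}", path="a.txt"))
try:
    gate.invoke("delete_file", lambda path: None, path="a.txt")
except PermissionError as exc:
    print(exc)
print([entry["allowed"] for entry in gate.audit_log])  # [True, False]
```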

These issues explain why MCP remains powerful but niche—a tool beloved by engineers but not yet ready for mass adoption.

Conclusion: The Dawn of a Unified AI Integration Layer

MCP is more than just another protocol. It is a structural shift toward AI as an extensible, interoperable runtime, not a closed prediction engine.

If the ecosystem continues to mature—simpler tooling, robust remote access, hardened security, and broader vendor support—MCP could easily become the universal standard for connecting AI to the world.

The same way USB unified hardware and HTTP unified the web, MCP has a real chance to unify AI–tool interactions across vendors, industries, and devices.

2025 will be a pivotal year: either MCP becomes the backbone of AI-powered software… or it inspires the next wave of open standards that will.