Testing Requirements: How to Get Good Ones and How to Deal With Bad Ones

Every day, we QAs test: APIs, user interfaces, cross-browser compatibility, business logic, and more. To know whether a product works the way it’s supposed to, we need to know the requirements.

But the sad truth is that the average engineer spends much of their working life developing and testing against badly formulated requirements, if there are any at all. As a result, software quality assurance engineers are forced to work with incomplete, outdated, or (let’s be honest) simply bad documentation.

The worst part is that everyone talks about testing requirements, but hardly anyone explains how to actually do it.

Being one of those engineers, I decided to share my views on requirements, how to test them, how to know they’re good, and what to do if they’re bad.

Definition of Requirements

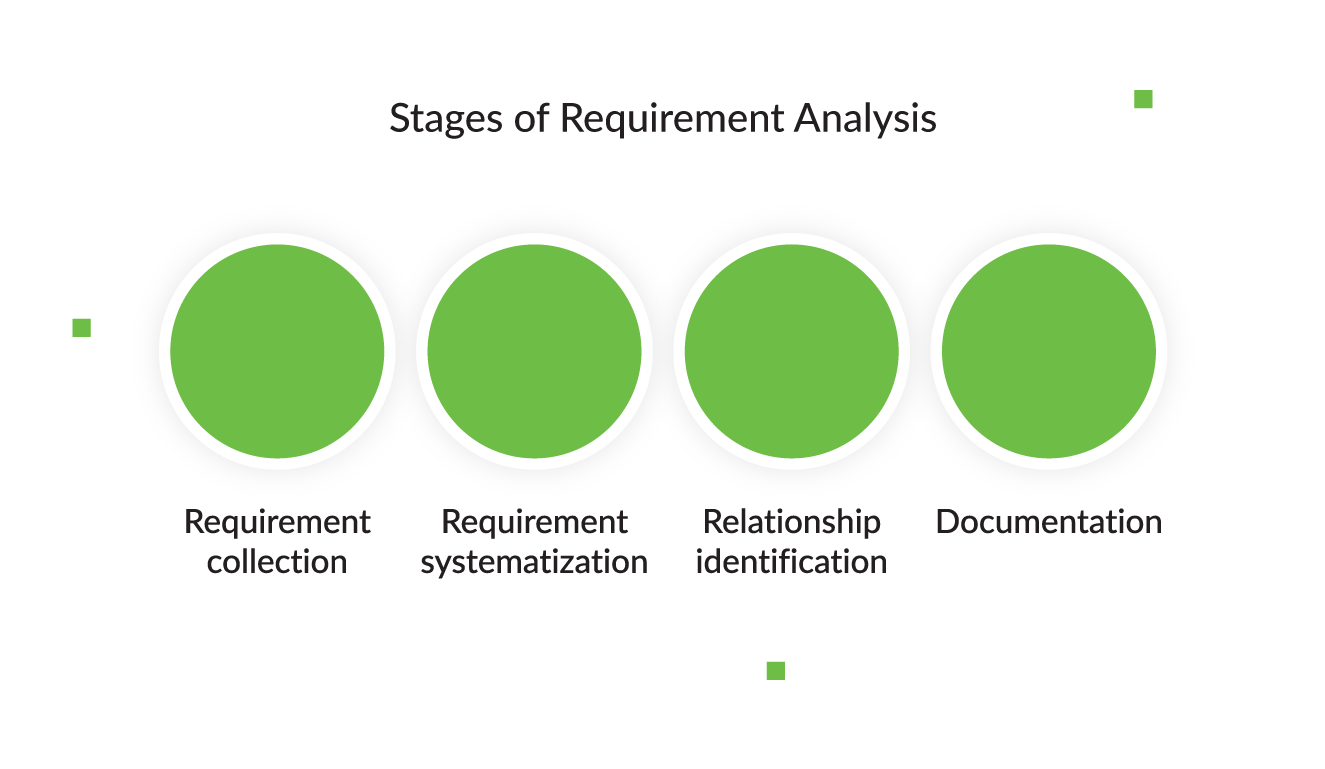

Let’s start with the basics. Web development companies are expected to receive detailed, structured product requirements from the client. But if we don’t always get good requirements, maybe it’s simply not clear to everyone what they are. In the simplest terms, product requirements are a set of statements that describe a product’s or system’s features and how they are realized. Requirement analysis in the QA process is, in turn, part of the software development process, and includes:

- Requirement collection

- Requirement systematization

- Relationship identification

- Documentation

Requirement analysis is a crucial part of testing, so it shouldn’t be neglected during development.

Types of Requirements for QA Testing

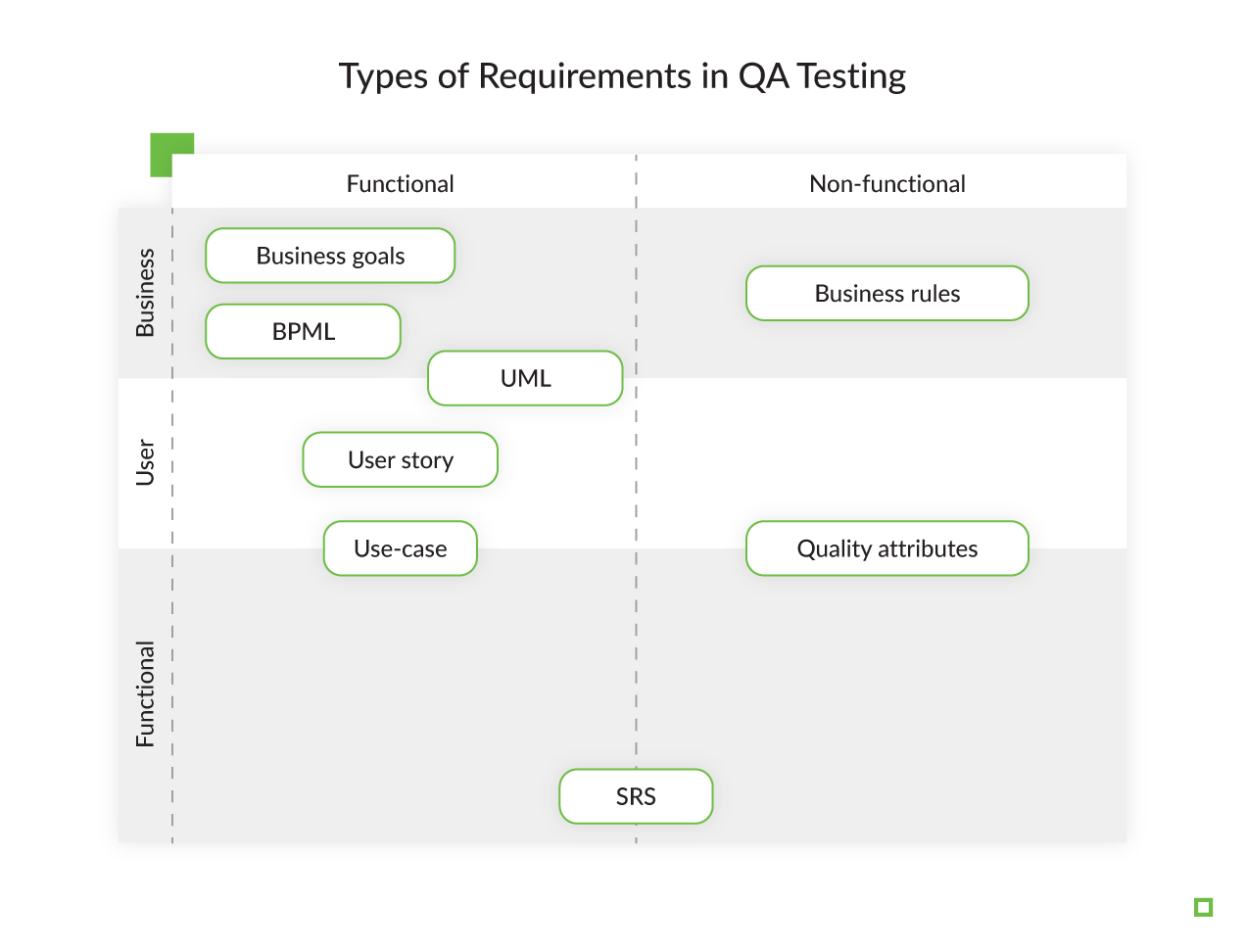

To be precise, the requirements we work with in QA testing come in several types. We can sort them by level (business, user, functional) and by attribute (functional, non-functional).

I won’t go into detail on these types, since you either know them already or can Google them in the blink of an eye. Instead, let’s talk about a type of requirement that no one has yet tried to properly describe and fix: bad requirements. Obvious examples are requirements the client lists during a video call over lunch, or ones scattered across Jira comments, Slack messages, or a team member’s handwritten notes.

Characteristics of Requirements

But does that mean a story in Jira or a long page in Confluence automatically makes requirements solid and well-written? Not automatically: they qualify only if they have certain characteristics. My software development team and I use the following list, which in my opinion works not just for software quality testing, but for any project with any kind of client-vendor combination.

| Feature | Description |
|---|---|
| Single responsibility | A requirement describes one thing, and one thing only. |
| Completeness | A requirement is fully laid out in one spot, and all the necessary information is present. |
| Consistency | A requirement does not contradict other requirements and fully complies with external documentation. |
| Atomicity | A requirement can’t be divided into several more detailed requirements without losing completeness. |
| Traceability | A requirement fully or partially complies with business needs as stated by the stakeholders and is documented. |
| Relevance | A requirement hasn’t become obsolete after time has passed. |
| Feasibility | A requirement can be realized within the given project. |
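Some of these characteristics can be partly checked mechanically once requirements are kept in a structured form. Traceability, for instance, is commonly tracked with a requirements traceability matrix that links each requirement to the business needs behind it (and often to the tests that cover it). The Python sketch below illustrates that general technique; the `Requirement` fields, the IDs, and the helper names are hypothetical, and it assumes Python 3.9+.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    # Hypothetical structure: an ID, a description, and links to the
    # business needs it serves and the test cases that cover it.
    req_id: str
    text: str
    business_needs: list[str] = field(default_factory=list)
    tests: list[str] = field(default_factory=list)


def untraced(requirements, known_needs):
    """Return IDs of requirements that don't trace to any known business need."""
    return [
        r.req_id
        for r in requirements
        if not any(need in known_needs for need in r.business_needs)
    ]


def untested(requirements):
    """Return IDs of requirements that have no linked test case."""
    return [r.req_id for r in requirements if not r.tests]


needs = {"BN-1", "BN-2"}
reqs = [
    Requirement("REQ-1", "User can reset password by email", ["BN-1"], ["TC-10"]),
    Requirement("REQ-2", "Show a confetti animation on login", [], ["TC-11"]),
    Requirement("REQ-3", "Export bookings as CSV", ["BN-2"]),
]

print(untraced(reqs, needs))  # ['REQ-2']
print(untested(reqs))         # ['REQ-3']
```

A requirement that shows up in the first list is a candidate for the "developed for nothing" risk; one in the second list can't yet be verified.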

As I said, this is a general list that can be applied to different projects. In agile teams, however, user stories are the most popular type of requirement. In this case, requirement quality can easily be described with INVEST, an acronym that is simple and easy to remember:

| Letter | Characteristic | Explanation |
|---|---|---|
| I | Independent | A user story is self-contained, with no inherent dependencies on any other user stories. |
| N | Negotiable | User stories are not explicit, fixed contracts and should leave room for discussion and changes. |
| V | Valuable | A user story must deliver functionality that is of value to the stakeholders. |
| E | Estimable | You must always be able to estimate the size of a user story. |
| S | Small | User stories shouldn’t be too big or too small, and a developer should be able to complete one within a single iteration. |
| T | Testable | The user story or its related description must provide enough information to verify that development is complete and has been done correctly. |

Testing QA Requirements: How Do I Know They’re Sound?

A QA engineer’s main job is to ensure quality throughout the whole development process. So it’s fair to say that working on requirements is a very important part of the job: we all know that the later a bug is found, the more it costs to fix. A good way to avoid this extra expense is to find bugs early by testing the requirements before the code is completed.

This seems obvious, but how exactly does one test requirements? The answer is rarely articulated properly, even though many specialists list the characteristics that should be checked.

At the beginning of my career, this was a very difficult subject, and everything I read or heard about it was pretty vague. That’s why, over time, I created a checklist that lets me see whether a user story is a good one (i.e., ready for development) or needs further analysis and elaboration.

| INVEST | Check if... |
|---|---|
| I | User story describes one part of the functionality. |
| I | User story has all the necessary information and doesn’t refer to other stories. |
| I | All entities and objects of the user story are clear and defined in this story. |
| I | User stories can be worked on in any order. |
| I | A valuable user story can be implemented without completing other, much less valuable stories. |
| N | User story is not an explicit contract for features. |
| N | The customer and programmer can co-create details during development. |
| N | User story captures the essence, not the details. |
| V | User story has value to the user. |
| V | All the items in the story comply with business needs. |
| V | All items in the story are useful to the system user. |
| V | The functionality described in the story is user-friendly. |
| E | The size of a user story allows it to be completed in one sprint. |
| E | You can divide a story into tasks that can be estimated. |
| E | Tasks in a story have enough information to be estimated. |
| E | Each task in a story is estimated at no more than 1 day. |
| E | User story can easily be estimated or sized, so it can be properly prioritized. |
| S | User story can be fully implemented in one sprint (including testing). |
| S | User story isn’t too big to plan/task. |
| S | User story isn’t too big to prioritize with reasonable accuracy. |
| S | A single story doesn’t take more than half an iteration. |
| T | Acceptance criteria are present in the story. |
| T | Acceptance criteria are accurate and unambiguous. |
| T | You can write a test for the user story. |
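If you run through this checklist often, it can help to keep it as data and record a yes/no answer for each check during grooming. Below is a minimal Python sketch of that idea; the shortened question texts (a subset of the table) and the `review_story` helper are hypothetical, not a tool the checklist comes with. A missing answer is treated as a "no", on the assumption that an unverified check shouldn’t pass.

```python
# A subset of the checklist above, keyed by INVEST letter.
CHECKLIST = {
    "I": ["Describes one part of the functionality",
          "Has all necessary information and doesn't refer to other stories"],
    "N": ["Is not an explicit contract for features"],
    "V": ["Has value to the user"],
    "E": ["Can be divided into tasks that can be estimated"],
    "S": ["Can be fully implemented in one sprint (including testing)"],
    "T": ["Acceptance criteria are present",
          "Acceptance criteria are accurate and unambiguous"],
}


def review_story(answers):
    """Return failed checks grouped by INVEST letter.

    `answers` maps each check text to True (yes) or False (no);
    a missing answer counts as a failure, since it wasn't verified.
    """
    failed = {}
    for letter, checks in CHECKLIST.items():
        misses = [c for c in checks if not answers.get(c, False)]
        if misses:
            failed[letter] = misses
    return failed


# Every check passes except one acceptance-criteria check.
answers = {check: True for checks in CHECKLIST.values() for check in checks}
answers["Acceptance criteria are accurate and unambiguous"] = False

result = review_story(answers)
print(result)
# {'T': ['Acceptance criteria are accurate and unambiguous']}
print("Ready for development" if not result else "Needs further analysis")
```

An empty result corresponds to answering Yes to everything; anything else tells you which INVEST letter to raise with the Product Owner.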

Bad Requirements Bring Risks

If you answered Yes to all of the above, congratulations! You’ve got yourself some accurate requirements. But what if you’ve tested the requirements and they turn out to be far from perfect? As a QA engineer, your task is to make the software quality-assurance process flow as smoothly as possible.

The next step is to show the Product Owner (client, team, and everyone involved in requirement development) the risks of bad requirements and explain how to create good QA requirements that are easy to work with and help developers create a well-functioning product.

| Feature | Risks |
|---|---|
| Single responsibility | A requirement describes one thing, and one thing only. What if it doesn’t? Then one requirement covers one story that includes several components developed by several engineers. If changes are required, it’s impossible to define the affected area, since several people are responsible for one story. This means more time is spent on grooming sub-tasks for this particular story. As a result, you get: more time spent on one story by several team members; an unknown number of bugs, since the affected area can’t be defined. |
| Completeness | What happens if the requirement isn’t complete or the description isn’t stored in one place? For instance, take the requirement “The `username` field can contain letters and numbers”. To complete this task, the developer needs to know which letters (Cyrillic, Latin, Arabic, etc.) and numbers (integers or fractions, Roman numerals, etc.) are accepted. After clarifying the requirements, the developer completes the task. Then the task moves on to the testing engineer, who doesn’t have the exact requirements either and also has to spend time getting the details. As a result, you get: more time spent on one story by several team members; a mismatch between the expected and actual result. |
| Consistency | What if a requirement contradicts other requirements or doesn’t fully comply with external documentation? Let’s say the product the team is working on has a form to fill out, and suddenly there are two contradictory tasks: one says the form requires a 9-digit code, while the other states that the minimum number of characters is 10. As a result: if the tasks are completed by different people and both requirements are implemented, this will cause a bug that takes time to fix; if the tasks are completed by one person, time will be wasted on clarification, which isn’t good either. |
| Atomicity | What are the risks of a requirement that isn’t atomic (i.e., it can still be divided into more detailed requirements)? A simple example is “Develop a booking flow for apartments like the booking flow for villas in the previous version”. This single requirement bundles a whole flow and can’t be understood on its own, without the old villa flow. On top of that, references to old parts of the requirements can confuse and mislead the development team, increasing both the time needed to go through the documentation and the number of bugs. Again, what you get is: lost time; lost money. |
| Traceability | If QA requirements can’t be traced to a business need, there’s a risk of a task being developed for nothing. Sometimes stories aren’t connected to a business need or component, or aren’t present in the documentation. This means there’s no way to understand their business value, and a task might be completed in a way that doesn’t fit the whole product and, as a result, is unusable. Which means you get: time wasted on development; money wasted. |
| Relevance | Sometimes clients or team members forget that a requirement can simply become outdated. For instance, suppose the layout of buttons on a page needs to change. The analyst decides not to update the documentation and instead asks the developer to make the change via private message. The developer makes the change and marks the task as complete. But the tester knows nothing about it and, during a planned regression test, reports the new layout as a defect. Since it’s been logged as a bug, another developer moves the buttons back to the position defined in the documentation. As a result: several team members’ time is wasted; the final result doesn’t match the expected outcome. |
| Feasibility | Some requirements can’t be implemented the way the client would like. Payments, for example, often can’t be instant: they’re a complicated process with several stages that depend on working hours, third-party services, etc. Failing to communicate this clearly to the client can cause misunderstandings, unrealistic deadlines, and chaotic changes mid-sprint. |
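The Completeness and Consistency rows show that a requirement only helps once it’s precise enough to test. Below is a minimal Python sketch of what clarification might produce for the `username` field, assuming (hypothetically) the team agrees on Latin letters and ASCII digits only, 3 to 20 characters long, plus a small check that flags the contradictory 9-digit / minimum-10-characters pair before anyone writes code. The limits and function names are illustrative, not taken from a real project.

```python
import re

# Hypothetical clarified rule: "The username field accepts only Latin letters
# (a-z, A-Z) and digits 0-9, 3 to 20 characters long."
USERNAME_RE = re.compile(r"[A-Za-z0-9]{3,20}")


def is_valid_username(value: str) -> bool:
    # fullmatch ensures the whole string matches, not just a prefix.
    return USERNAME_RE.fullmatch(value) is not None


def length_constraints_conflict(constraints):
    """Check whether (min_len, max_len) pairs from different requirements
    can all be satisfied at once. Returns True if no length fits them all."""
    lowest_max = min(max_len for _, max_len in constraints)
    highest_min = max(min_len for min_len, _ in constraints)
    return highest_min > lowest_max


# Task A: "the code has exactly 9 digits"        -> length 9..9
# Task B: "the code has at least 10 characters"  -> length 10..unbounded
code_rules = [(9, 9), (10, float("inf"))]

print(is_valid_username("anna2024"))  # True
print(is_valid_username("анна"))      # False: Cyrillic is not in the agreed set
print(is_valid_username("ab"))        # False: too short
print(length_constraints_conflict(code_rules))  # True
```

Writing the agreed rule down this precisely gives the developer and the tester the same answer, which is exactly the information the Completeness row says goes missing.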

How to Deal with Bad Requirements

Ideally, these arguments should help you get better requirements for product development and make the QA process easier and more transparent. Sadly, this doesn’t always happen. What do you do then? What should QAs do when they have tons of unstructured documentation pages, different versions of tasks, heaps of Jira comments, and questions scattered across a bunch of emails?

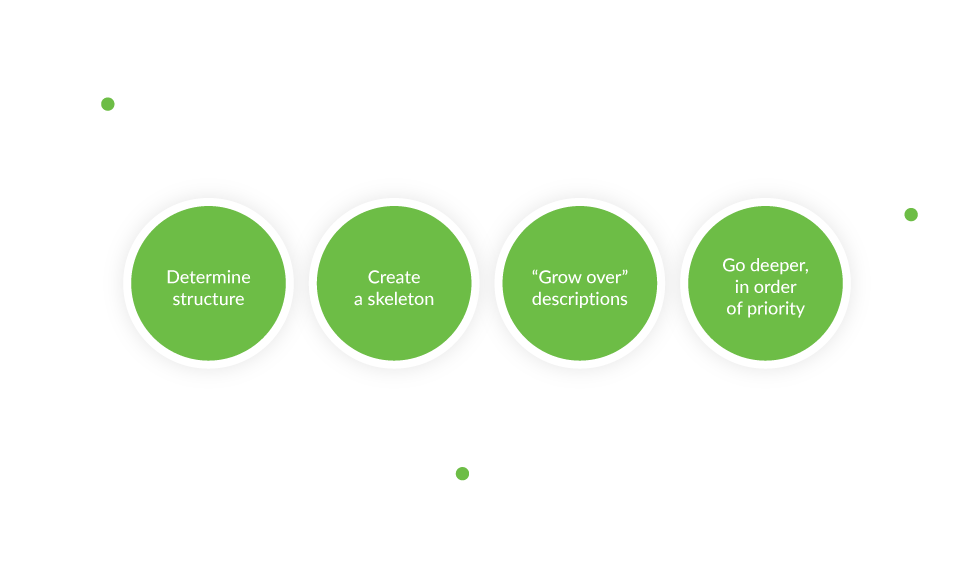

Some engineers have developed their own quality-assurance methodologies. Here’s a simple algorithm I developed and use myself. Basically, you just take everything apart and put it together the way it should be.

- Determine structure. Write down a list of components and functions. Use your engineering skills to define the connections and dependencies between them, as well as their hierarchy. Ask yourself what goal you want to achieve with every component or feature, and how.

- Create a skeleton. It can be a UML diagram or a page map on Confluence including all the attachments, connections, and links. You should end up with a transparent structure that everyone can follow.

- “Grow over” descriptions. Use your skeleton to add some muscle: entity and functionality descriptions.

- Go deeper, in order of priority. Add the necessary characteristics to your documentation, in order of priority. (For example, relevance has a higher priority than traceability.)
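The "Determine structure" and "Create a skeleton" steps boil down to listing components and the dependencies between them. If you keep that list in a script rather than only on a diagram, Python’s standard `graphlib` module (Python 3.9+) can produce an order in which to describe components (each after everything it depends on) and report circular dependencies. This is a minimal sketch of that idea, not part of the algorithm itself; the component names are hypothetical, loosely based on the booking example.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical components of a booking product, each mapped to
# the components it depends on.
components = {
    "search": {"catalog"},
    "catalog": set(),
    "booking": {"search", "payments", "accounts"},
    "payments": {"accounts"},
    "accounts": set(),
}


def description_order(graph):
    """Return components so that each appears after everything it depends on,
    or raise ValueError naming a dependency cycle the documentation must resolve."""
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError as err:
        # err.args[1] holds the list of nodes forming the cycle.
        raise ValueError(f"Circular dependency: {err.args[1]}") from err


def missing_components(graph):
    """Dependencies that are referenced but never described themselves."""
    referenced = set().union(*graph.values())
    return sorted(referenced - set(graph))


print(description_order(components))
print(missing_components(components))  # []
```

A non-empty result from `missing_components` is one of the "holes" the algorithm is meant to expose, and a cycle usually means the structure needs another look before descriptions are added.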

When all the information is well structured, the holes in it are easier to see. This algorithm should allow you to see what information is present and what needs to be added to complete the puzzle.

As I’ve said, product requirements are often a pain point for everyone involved. To make a good product, all its requirements and functionality should be communicated well. Everyone should fully understand the goal they’re working towards and be on the same page about its value.

Sadly, it doesn’t always work this way. The worst thing about missing (or inadequate) requirements is that there’s no single way to solve the problem. Developers deal with it in different ways, and some don’t deal with it at all. This is why I decided to share my experience and help you navigate this mess. I hope this article helps you identify the problem and find ways to either get the requirements you deserve or at least deal with the so-so ones you have.

If you are looking for a reliable software vendor with developers who have participated in demanding projects and experienced QAs who are well-versed in all of the above nuances, contact the Django Stars team.

- Who is responsible for creating testing requirements?

  Requirements usually come from the client or Product Owner: web development companies are expected to receive detailed, structured product requirements from the client. QA engineers then analyze and test those requirements, ideally before the code is completed. In practice, though, not everyone has a clear idea of what good requirements are, which is why they often fall short.

- What is requirement analysis in the QA process?

  Requirement analysis in testing is an important part of the software development process. It includes the following:

  - Requirement collection

  - Requirement systematization

  - Relationship identification

  - Documentation

- Is it possible to test the product with bad testing requirements?

  Yes. To deal with bad requirements, a QA engineer can take everything apart and put it back together the way it should be. Here’s a simple algorithm that should allow you to see what information is present and what needs to be added to complete the puzzle:

  - Determine structure.

  - Create a skeleton (it can be a UML diagram or a page map on Confluence, including all the attachments, connections, and links).

  - Add entity and functionality descriptions.

  - Go deeper and add the necessary characteristics to your documentation in order of priority.

- Can the Django Stars team help me create testing requirements?

  The Django Stars team has over 13 years of experience developing software products in various industries. Our services cover all the software development life cycle stages, including the QA process. If you need help preparing testing requirements, you can discuss them with our QA specialists while working on your project.